[November-2020]Valid DP-100 Dumps Free Download in Braindump2go[Q190-Q212]

2020/November Latest Braindump2go DP-100 Exam Dumps with PDF and VCE Free Updated Today! Following are some new DP-100 Real Exam Questions!

QUESTION 190

You create a batch inference pipeline by using the Azure ML SDK.

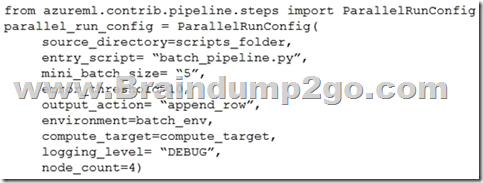

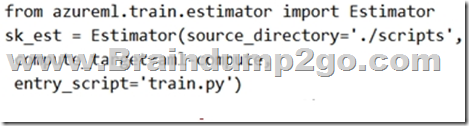

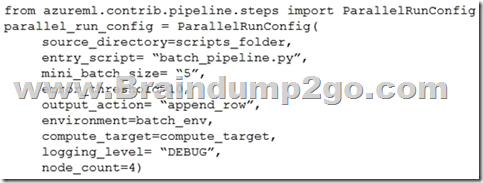

You configure the pipeline parameters by executing the following code:

You need to obtain the output from the pipeline execution.

Where will you find the output?

A. the digit_identification.py script

B. the debug log

C. the Activity Log in the Azure portal for the Machine Learning workspace

D. the Inference Clusters tab in Machine Learning studio

E. a file named parallel_run_step.txt located in the output folder

Answer: E

Explanation:

output_action (str): How the output is to be organized. Currently supported values are 'append_row' and 'summary_only'.

'append_row': All values output by run() method invocations will be aggregated into one unique file named parallel_run_step.txt that is created in the output location.

'summary_only'
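To make the 'append_row' behaviour concrete, here is a minimal local simulation. It does not use the Azure ML SDK: the `run` function, the mini-batches, and the temporary output folder are hypothetical stand-ins, and only the file name parallel_run_step.txt comes from the explanation above.

```python
import os
import tempfile

OUTPUT_FILE = "parallel_run_step.txt"  # file name taken from the explanation above


def run(mini_batch):
    """Hypothetical scoring function: returns one result row per input item."""
    return [f"{item}: {len(item)}" for item in mini_batch]


def append_rows(mini_batches, output_dir):
    """Simulate 'append_row': every value returned by run() lands in one file."""
    path = os.path.join(output_dir, OUTPUT_FILE)
    with open(path, "a", encoding="utf-8") as handle:
        for batch in mini_batches:
            for row in run(batch):
                handle.write(row + "\n")
    return path


def demo():
    """Write two hypothetical mini-batches and read the aggregated file back."""
    with tempfile.TemporaryDirectory() as output_dir:
        path = append_rows([["a.png", "bb.png"], ["ccc.png"]], output_dir)
        with open(path, encoding="utf-8") as handle:
            return handle.read().splitlines()
```

Calling `demo()` returns the rows from both mini-batches in a single list, which mirrors why the answer points at one aggregated file in the output folder.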

Reference:

https://docs.microsoft.com/en-us/python/api/azureml-contrib-pipeline-steps/azureml.contrib.pipeline.steps.parallelrunconfig

QUESTION 191

You use Azure Machine Learning designer to create a real-time service endpoint.

You have a single Azure Machine Learning service compute resource.

You train the model and prepare the real-time pipeline for deployment.

You need to publish the inference pipeline as a web service.

Which compute type should you use?

A. a new Machine Learning Compute resource

B. Azure Kubernetes Services

C. HDInsight

D. the existing Machine Learning Compute resource

E. Azure Databricks

Answer: B

Explanation:

Azure Kubernetes Service (AKS) can be used for real-time inference.

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/concept-compute-target

QUESTION 192

You plan to run a script as an experiment using a Script Run Configuration. The script uses modules from the scipy library as well as several Python packages that are not typically installed in a default conda environment.

You plan to run the experiment on your local workstation for small datasets and scale out the experiment by running it on more powerful remote compute clusters for larger datasets.

You need to ensure that the experiment runs successfully on local and remote compute with the least administrative effort.

What should you do?

A. Do not specify an environment in the run configuration for the experiment. Run the experiment by using the default environment.

B. Create a virtual machine (VM) with the required Python configuration and attach the VM as a compute target. Use this compute target for all experiment runs.

C. Create and register an Environment that includes the required packages. Use this Environment for all experiment runs.

D. Create a config.yaml file defining the conda packages that are required and save the file in the experiment folder.

E. Always run the experiment with an Estimator by using the default packages.

Answer: C

Explanation:

If you have an existing Conda environment on your local computer, then you can use the service to create an environment object. By using this strategy, you can reuse your local interactive environment on remote runs.

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-use-environments

QUESTION 193

You write a Python script that processes data in a comma-separated values (CSV) file.

You plan to run this script as an Azure Machine Learning experiment.

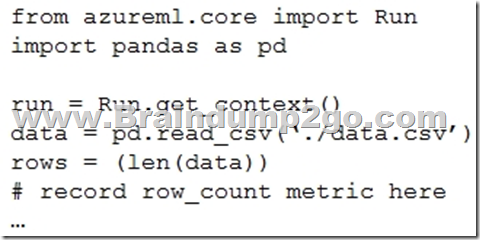

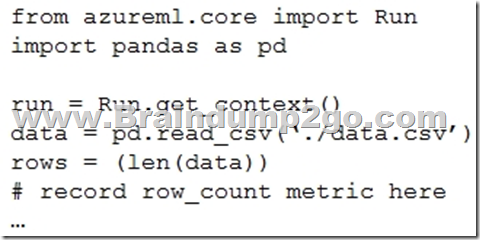

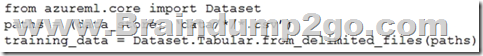

The script loads the data and determines the number of rows it contains using the following code:

You need to record the row count as a metric named row_count that can be returned using the get_metrics method of the Run object after the experiment run completes.

Which code should you use?

A. run.upload_file('row_count', './data.csv')

B. run.log('row_count', rows)

C. run.tag('row_count', rows)

D. run.log_table('row_count', rows)

E. run.log_row('row_count', rows)

Answer: B

Explanation:

Log a numerical or string value to the run with the given name using log(name, value, description='').

Logging a metric to a run causes that metric to be stored in the run record in the experiment. You can log the same metric multiple times within a run, the result being considered a vector of that metric.

Example: run.log("accuracy", 0.95)

Incorrect Answers:

E: Using log_row(name, description=None, **kwargs) creates a metric with multiple columns as described in kwargs. Each named parameter generates a column with the value specified. log_row can be called once to log an arbitrary tuple, or multiple times in a loop to generate a complete table.

Example: run.log_row("Y over X", x=1, y=0.4)
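The difference between `log` and `log_row` can be illustrated with a small in-memory stand-in. `FakeRun` below is hypothetical and is not the azureml.core.Run class; in particular, the shape returned by its `get_metrics` is an assumption chosen to mirror the description above (repeated values form a vector, keyword arguments form columns).

```python
from collections import defaultdict


class FakeRun:
    """Hypothetical in-memory stand-in for a run; NOT the azureml.core.Run class."""

    def __init__(self):
        self._metrics = defaultdict(list)

    def log(self, name, value):
        # Each call appends, so repeated logging builds a vector of values.
        self._metrics[name].append(value)

    def log_row(self, name, **kwargs):
        # Each call adds one row; every keyword argument becomes a column.
        self._metrics[name].append(dict(kwargs))

    def get_metrics(self):
        # A metric logged once is returned as a scalar, otherwise as a list.
        return {k: v[0] if len(v) == 1 else list(v) for k, v in self._metrics.items()}


run = FakeRun()
rows = 4  # e.g. the number of rows counted in the CSV file
run.log("row_count", rows)
run.log("accuracy", 0.90)
run.log("accuracy", 0.95)
run.log_row("Y over X", x=1, y=0.4)
metrics = run.get_metrics()
```

A single scalar such as the row count only needs `log`; `log_row` is meant for multi-column records.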

Reference:

https://docs.microsoft.com/en-us/python/api/azureml-core/azureml.core.run

QUESTION 194

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

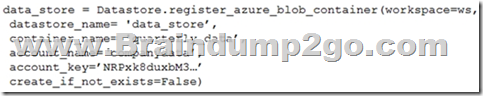

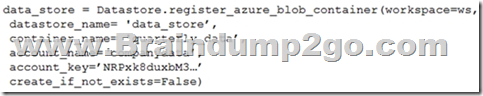

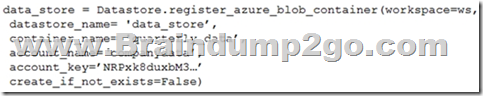

You create an Azure Machine Learning service datastore in a workspace. The datastore contains the following files:

/data/2018/Q1.csv

/data/2018/Q2.csv

/data/2018/Q3.csv

/data/2018/Q4.csv

/data/2019/Q1.csv

All files store data in the following format:

id,f1,f2,I

1,1,2,0

2,1,1,1

3,2,1,0

4,2,2,1

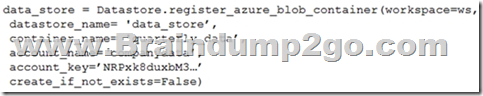

You run the following code:

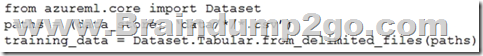

You need to create a dataset named training_data and load the data from all files into a single data frame by using the following code:

Solution: Run the following code:

Does the solution meet the goal?

A. Yes

B. No

Answer: B

Explanation:

Define paths with two file paths instead.

Use Dataset.Tabular.from_delimited_files, because the data isn't cleansed.

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-create-register-datasets

QUESTION 195

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You create an Azure Machine Learning service datastore in a workspace. The datastore contains the following files:

/data/2018/Q1.csv

/data/2018/Q2.csv

/data/2018/Q3.csv

/data/2018/Q4.csv

/data/2019/Q1.csv

All files store data in the following format:

id,f1,f2,I

1,1,2,0

2,1,1,1

3,2,1,0

4,2,2,1

You run the following code:

You need to create a dataset named training_data and load the data from all files into a single data frame by using the following code:

Solution: Run the following code:

Does the solution meet the goal?

A. Yes

B. No

Answer: B

Explanation:

Use two file paths.

Use Dataset.Tabular.from_delimited_files instead of Dataset.File.from_files, because the data isn't cleansed.

Note:

A FileDataset references single or multiple files in your datastores or public URLs. If your data is already cleansed, and ready to use in training experiments, you can download or mount the files to your compute as a FileDataset object.

A TabularDataset represents data in a tabular format by parsing the provided file or list of files. This provides you with the ability to materialize the data into a pandas or Spark DataFrame so you can work with familiar data preparation and training libraries without having to leave your notebook. You can create a TabularDataset object from .csv, .tsv, .parquet, .jsonl files, and from SQL query results.

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-create-register-datasets

QUESTION 196

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You create an Azure Machine Learning service datastore in a workspace. The datastore contains the following files:

/data/2018/Q1.csv

/data/2018/Q2.csv

/data/2018/Q3.csv

/data/2018/Q4.csv

/data/2019/Q1.csv

All files store data in the following format:

id,f1,f2,I

1,1,2,0

2,1,1,1

3,2,1,0

4,2,2,1

You run the following code:

You need to create a dataset named training_data and load the data from all files into a single data frame by using the following code:

Solution: Run the following code:

Does the solution meet the goal?

A. Yes

B. No

Answer: A

Explanation:

Use two file paths.

Use Dataset.Tabular.from_delimited_files, because the data isn't cleansed.

Note:

A TabularDataset represents data in a tabular format by parsing the provided file or list of files. This provides you with the ability to materialize the data into a pandas or Spark DataFrame so you can work with familiar data preparation and training libraries without having to leave your notebook. You can create a TabularDataset object from .csv, .tsv, .parquet, .jsonl files, and from SQL query results.

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-create-register-datasets

QUESTION 197

You plan to use the Hyperdrive feature of Azure Machine Learning to determine the optimal hyperparameter values when training a model.

You must use Hyperdrive to try combinations of the following hyperparameter values:

- learning_rate: any value between 0.001 and 0.1

- batch_size: 16, 32, or 64

You need to configure the search space for the Hyperdrive experiment.

Which two parameter expressions should you use? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. a choice expression for learning_rate

B. a uniform expression for learning_rate

C. a normal expression for batch_size

D. a choice expression for batch_size

E. a uniform expression for batch_size

Answer: BD

Explanation:

B: Continuous hyperparameters are specified as a distribution over a continuous range of values.

Supported distributions include:

uniform(low, high) - Returns a value uniformly distributed between low and high

D: Discrete hyperparameters are specified as a choice among discrete values. choice can be:

one or more comma-separated values

a range object

any arbitrary list object
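The search space from the question can be sketched in plain Python. The `uniform` and `choice` helpers below are hypothetical stand-ins written for this example (they only borrow the names of the Hyperdrive expressions), and the sampling loop is an assumption about how such a space might be drawn from, not the service's implementation.

```python
import random


def uniform(low, high):
    """Stand-in for a continuous range: returns a sampler over [low, high]."""
    return lambda rng: rng.uniform(low, high)


def choice(*options):
    """Stand-in for a discrete set: returns a sampler over the given values."""
    return lambda rng: rng.choice(options)


# learning_rate is continuous, batch_size is one of three discrete values.
search_space = {
    "learning_rate": uniform(0.001, 0.1),
    "batch_size": choice(16, 32, 64),
}


def sample(space, seed):
    """Draw one hyperparameter combination from the space."""
    rng = random.Random(seed)
    return {name: sampler(rng) for name, sampler in space.items()}


samples = [sample(search_space, seed) for seed in range(5)]
```

Every sample pairs a learning rate from the continuous range with one of the three allowed batch sizes, which is why a uniform expression fits the first parameter and a choice expression fits the second.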

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-tune-hyperparameters

QUESTION 198

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You train a classification model by using a logistic regression algorithm.

You must be able to explain the model's predictions by calculating the importance of each feature, both as an overall global relative importance value and as a measure of local importance for a specific set of predictions.

You need to create an explainer that you can use to retrieve the required global and local feature importance values.

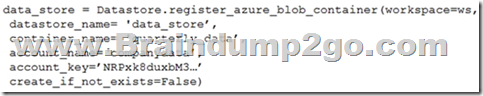

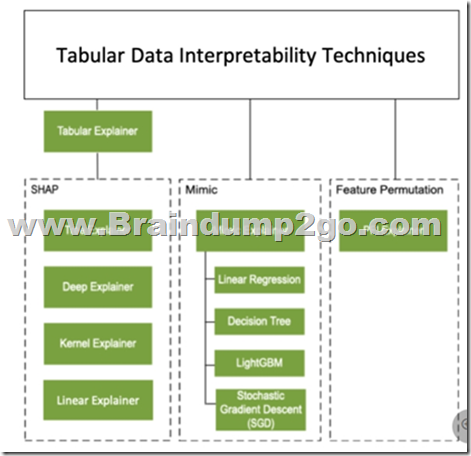

Solution: Create a MimicExplainer.

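Does the solution meet the goal?

A. Yes

B. No

Answer: A

Explanation:

A MimicExplainer can return both global and local feature importance values, so it meets the goal. A Permutation Feature Importance Explainer (PFI) would not, because it explains only the overall behavior of the model.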

Note 1: Mimic explainer is based on the idea of training global surrogate models to mimic blackbox models.

A global surrogate model is an intrinsically interpretable model that is trained to approximate the predictions of any black box model as accurately as possible. Data scientists can interpret the surrogate model to draw conclusions about the black box model.

Note 2: Permutation Feature Importance Explainer (PFI): Permutation Feature Importance is a technique used to explain classification and regression models. At a high level, the way it works is by randomly shuffling data one feature at a time for the entire dataset and calculating how much the performance metric of interest changes. The larger the change, the more important that feature is. PFI can explain the overall behavior of any underlying model but does not explain individual predictions.
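The shuffling procedure described in Note 2 can be sketched without any library. The classifier, the synthetic data, and the use of accuracy as the metric are all hypothetical choices made for this illustration; this is not the PFIExplainer implementation.

```python
import random


def model(row):
    """Hypothetical classifier: predicts 1 when feature 0 exceeds 0.5, ignores feature 1."""
    return 1 if row[0] > 0.5 else 0


def accuracy(rows, labels):
    """Fraction of rows for which the model's prediction matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(rows, labels, seed=0):
    """Accuracy drop after shuffling each feature column, one at a time."""
    rng = random.Random(seed)
    baseline = accuracy(rows, labels)
    importances = []
    for col in range(len(rows[0])):
        shuffled_col = [r[col] for r in rows]
        rng.shuffle(shuffled_col)
        permuted = [r[:col] + [v] + r[col + 1:] for r, v in zip(rows, shuffled_col)]
        importances.append(baseline - accuracy(permuted, labels))
    return importances


data_rng = random.Random(42)
rows = [[data_rng.random(), data_rng.random()] for _ in range(200)]
labels = [1 if r[0] > 0.5 else 0 for r in rows]
importances = permutation_importance(rows, labels)
```

The result is one number per feature for the whole dataset. Nothing in the procedure yields a value for an individual prediction, which is the limitation the note points out.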

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-machine-learning-interpretability

QUESTION 199

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You train a classification model by using a logistic regression algorithm.

You must be able to explain the model's predictions by calculating the importance of each feature, both as an overall global relative importance value and as a measure of local importance for a specific set of predictions.

You need to create an explainer that you can use to retrieve the required global and local feature importance values.

Solution: Create a TabularExplainer.

Does the solution meet the goal?

A. Yes

B. No

Answer: A

Explanation:

A TabularExplainer can return both global and local feature importance values, so it meets the goal. A Permutation Feature Importance Explainer (PFI) would not, because it explains only the overall behavior of the model.

Note: Permutation Feature Importance Explainer (PFI): Permutation Feature Importance is a technique used to explain classification and regression models. At a high level, the way it works is by randomly shuffling data one feature at a time for the entire dataset and calculating how much the performance metric of interest changes. The larger the change, the more important that feature is. PFI can explain the overall behavior of any underlying model but does not explain individual predictions.

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-machine-learning-interpretability

QUESTION 200

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You train a classification model by using a logistic regression algorithm.

You must be able to explain the model's predictions by calculating the importance of each feature, both as an overall global relative importance value and as a measure of local importance for a specific set of predictions.

You need to create an explainer that you can use to retrieve the required global and local feature importance values.

Solution: Create a PFIExplainer.

Does the solution meet the goal?

A. Yes

B. No

Answer: B

Explanation:

Permutation Feature Importance Explainer (PFI): Permutation Feature Importance is a technique used to explain classification and regression models. At a high level, the way it works is by randomly shuffling data one feature at a time for the entire dataset and calculating how much the performance metric of interest changes. The larger the change, the more important that feature is. PFI can explain the overall behavior of any underlying model but does not explain individual predictions.

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-machine-learning-interpretability

QUESTION 201

You create a machine learning model by using the Azure Machine Learning designer. You publish the model as a real-time service on an Azure Kubernetes Service (AKS) inference compute cluster. You make no change to the deployed endpoint configuration.

You need to provide application developers with the information they need to consume the endpoint.

Which two values should you provide to application developers? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. The name of the AKS cluster where the endpoint is hosted.

B. The name of the inference pipeline for the endpoint.

C. The URL of the endpoint.

D. The run ID of the inference pipeline experiment for the endpoint.

E. The key for the endpoint.

Answer: CE

Explanation:

Deploying an Azure Machine Learning model as a web service creates a REST API endpoint. You can send data to this endpoint and receive the prediction returned by the model.

You create a web service when you deploy a model to your local environment, Azure Container Instances, Azure Kubernetes Service, or field-programmable gate arrays (FPGA). You retrieve the URI used to access the web service by using the Azure Machine Learning SDK. If authentication is enabled, you can also use the SDK to get the authentication keys or tokens.

Example:

# URL for the web service

scoring_uri = '<your web service URI>'

# If the service is authenticated, set the key or token

key = '<your key or token>'
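As a sketch of how a developer might use those two values, the snippet below builds (but does not send) an HTTP request with the standard library. The URL, the key, and the payload shape are placeholders; the actual input format depends on the deployed model.

```python
import json
import urllib.request


def build_scoring_request(scoring_uri, key, payload):
    """Build (but do not send) a POST request for a key-authenticated endpoint."""
    headers = {"Content-Type": "application/json"}
    if key:
        # The key or token is passed as a bearer token.
        headers["Authorization"] = f"Bearer {key}"
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(scoring_uri, data=body, headers=headers)


# Placeholder values; the payload shape depends on the deployed model.
request = build_scoring_request(
    "https://example.invalid/score",
    "my-secret-key",
    {"data": [[1, 2, 3]]},
)
# urllib.request.urlopen(request) would send it to a real endpoint.
```

Only the endpoint URL and the key appear in the request, which matches the two values the answer says developers need.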

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-consume-web-service

QUESTION 202

You use Azure Machine Learning designer to create a training pipeline for a regression model.

You need to prepare the pipeline for deployment as an endpoint that generates predictions asynchronously for a dataset of input data values.

What should you do?

A. Clone the training pipeline.

B. Create a batch inference pipeline from the training pipeline.

C. Create a real-time inference pipeline from the training pipeline.

D. Replace the dataset in the training pipeline with an Enter Data Manually module.

Answer: B

Explanation:

A batch inference pipeline generates predictions asynchronously for a dataset of input values, which is what the scenario requires. Converting the training pipeline into a real-time inference pipeline instead removes training modules and adds web service inputs and outputs to handle individual requests.

Incorrect Answers:

D: Use the Enter Data Manually module to create a small dataset by typing values.

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/tutorial-designer-automobile-price-deploy

https://docs.microsoft.com/en-us/azure/machine-learning/algorithm-module-reference/enter-data-manually

QUESTION 203

You retrain an existing model.

You need to register the new version of a model while keeping the current version of the model in the registry.

What should you do?

A. Register a model with a different name from the existing model and a custom property named version with the value 2.

B. Register the model with the same name as the existing model.

C. Save the new model in the default datastore with the same name as the existing model. Do not register the new model.

D. Delete the existing model and register the new one with the same name.

Answer: B

Explanation:

Model version: A version of a registered model. When a new model is added to the Model Registry, it is added as Version 1. Each model registered to the same model name increments the version number.
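The versioning rule can be mimicked with a tiny in-memory registry. `FakeModelRegistry`, the model name, and the payload strings are hypothetical; the class only reproduces the rule stated above and is not a real registry API.

```python
class FakeModelRegistry:
    """Hypothetical in-memory registry; only mimics the versioning rule above."""

    def __init__(self):
        self._versions = {}  # model name -> list of registered payloads

    def register(self, name, payload):
        """Store the payload under the name and return its new version number."""
        versions = self._versions.setdefault(name, [])
        versions.append(payload)
        return len(versions)  # version numbers start at 1

    def get(self, name, version):
        """Return the payload stored for a given name and version."""
        return self._versions[name][version - 1]


registry = FakeModelRegistry()
v1 = registry.register("weather-model", "weights-from-first-training")
v2 = registry.register("weather-model", "weights-from-retraining")
```

Registering under the same name yields version 2 while version 1 stays retrievable, which is the behaviour the question asks for.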

Reference:

https://docs.microsoft.com/en-us/azure/databricks/applications/mlflow/model-registry

QUESTION 204

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

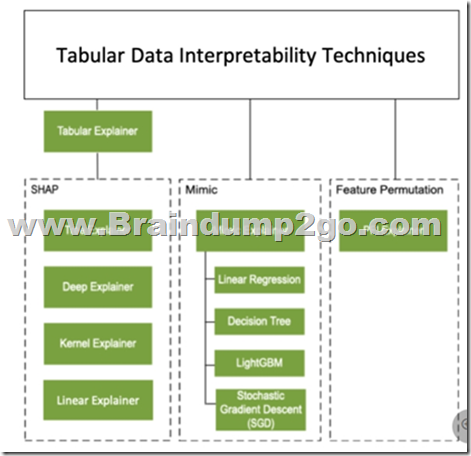

You create a model to forecast weather conditions based on historical data.

You need to create a pipeline that runs a processing script to load data from a datastore and pass the processed data to a machine learning model training script.

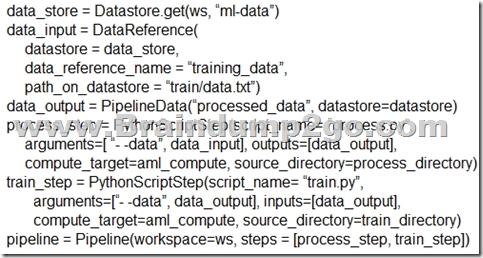

Solution: Run the following code:

Does the solution meet the goal?

A. Yes

B. No

Answer: A

Explanation:

The two steps are present: process_step and train_step

Data_input correctly references the data in the data store.

Note:

Data used in pipeline can be produced by one step and consumed in another step by providing a PipelineData object as an output of one step and an input of one or more subsequent steps.

PipelineData objects are also used when constructing Pipelines to describe step dependencies. To specify that a step requires the output of another step as input, use a PipelineData object in the constructor of both steps.

For example, the pipeline train step depends on the process_step_output output of the pipeline process step:

    from azureml.pipeline.core import Pipeline, PipelineData
    from azureml.pipeline.steps import PythonScriptStep

    datastore = ws.get_default_datastore()

    process_step_output = PipelineData("processed_data", datastore=datastore)
    process_step = PythonScriptStep(script_name="process.py",
                                    arguments=["--data_for_train", process_step_output],
                                    outputs=[process_step_output],
                                    compute_target=aml_compute,
                                    source_directory=process_directory)
    train_step = PythonScriptStep(script_name="train.py",
                                  arguments=["--data_for_train", process_step_output],
                                  inputs=[process_step_output],
                                  compute_target=aml_compute,
                                  source_directory=train_directory)

    pipeline = Pipeline(workspace=ws, steps=[process_step, train_step])

Reference:

https://docs.microsoft.com/en-us/python/api/azureml-pipeline-core/azureml.pipeline.core.pipelinedata?view=azure-ml-py

QUESTION 205

You run an experiment that uses an AutoMLConfig class to define an automated machine learning task with a maximum of ten model training iterations. The task will attempt to find the best performing model based on a metric named accuracy.

You submit the experiment with the following code:

You need to create Python code that returns the best model that is generated by the automated machine learning task.

Which code segment should you use?

A. best_model = automl_run.get_details()

B. best_model = automl_run.get_metrics()

C. best_model = automl_run.get_file_names()[1]

D. best_model = automl_run.get_output()[1]

Answer: D

Explanation:

The get_output method returns the best run and the fitted model.

Reference:

https://notebooks.azure.com/azureml/projects/azureml-getting-started/html/how-to-use-azureml/automated-machine-learning/classification/auto-ml-classification.ipynb

QUESTION 206

You use the Azure Machine Learning SDK to run a training experiment that trains a classification model and calculates its accuracy metric.

The model will be retrained each month as new data is available.

You must register the model for use in a batch inference pipeline.

You need to register the model and ensure that the models created by subsequent retraining experiments are registered only if their accuracy is higher than the currently registered model.

What are two possible ways to achieve this goal? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

A. Specify a different name for the model each time you register it.

B. Register the model with the same name each time regardless of accuracy, and always use the latest version of the model in the batch inferencing pipeline.

C. Specify the model framework version when registering the model, and only register subsequent models if this value is higher.

D. Specify a property named accuracy with the accuracy metric as a value when registering the model, and only register subsequent models if their accuracy is higher than the accuracy property value of the currently registered model.

E. Specify a tag named accuracy with the accuracy metric as a value when registering the model, and only register subsequent models if their accuracy is higher than the accuracy tag value of the currently registered model.

Answer: DE

Explanation:

D: Properties, like tags, are key-value pairs stored with the registered model, so an accuracy property can be read back and compared before registering a new model.

E: Using tags, you can track useful information such as the name and version of the machine learning library used to train the model. Note that tags must be alphanumeric.
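The comparison step can be sketched as a small helper. `should_register` and the tag dictionary are hypothetical names for this example, and treating the stored accuracy as a string is an assumption; fetching the current model's tags and the actual registration call are left out.

```python
def should_register(new_accuracy, current_tags):
    """Return True when no model is registered yet or the new accuracy is higher."""
    if current_tags is None or "accuracy" not in current_tags:
        return True
    # The stored value is treated as a string here, so convert before comparing.
    return new_accuracy > float(current_tags["accuracy"])


decision_better = should_register(0.91, {"accuracy": "0.90"})
decision_worse = should_register(0.85, {"accuracy": "0.90"})
decision_first = should_register(0.50, None)
```

The same check works whether the value is stored as a tag or as a property; only the place it is read from changes.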

Reference:

https://notebooks.azure.com/xavierheriat/projects/azureml-getting-started/html/how-to-use-azureml/deployment/register-model-create-image-deploy-service/register-model-create-image-deploy-service.ipynb

QUESTION 207

You plan to use the Hyperdrive feature of Azure Machine Learning to determine the optimal hyperparameter values when training a model.

You must use Hyperdrive to try combinations of the following hyperparameter values. You must not apply an early termination policy.

- learning_rate: any value between 0.001 and 0.1

- batch_size: 16, 32, or 64

You need to configure the sampling method for the Hyperdrive experiment.

Which two sampling methods can you use? Each correct answer is a complete solution.

NOTE: Each correct selection is worth one point.

A. No sampling

B. Grid sampling

C. Bayesian sampling

D. Random sampling

Answer: CD

Explanation:

C: Bayesian sampling is based on the Bayesian optimization algorithm and makes intelligent choices on the hyperparameter values to sample next. It picks the sample based on how the previous samples performed, such that the new sample improves the reported primary metric.

Bayesian sampling does not support any early termination policy.

Example:

    from azureml.train.hyperdrive import BayesianParameterSampling
    from azureml.train.hyperdrive import uniform, choice

    param_sampling = BayesianParameterSampling({
        "learning_rate": uniform(0.05, 0.1),
        "batch_size": choice(16, 32, 64, 128)
    })

D: In random sampling, hyperparameter values are randomly selected from the defined search space.

Random sampling allows the search space to include both discrete and continuous hyperparameters.

Incorrect Answers:

B: Grid sampling can be used if your hyperparameter space can be defined as a choice among discrete values and if you have sufficient budget to exhaustively search over all values in the defined search space.

Additionally, one can use automated early termination of poorly performing runs, which reduces wastage of resources.

Example, the following space has a total of six samples:

    from azureml.train.hyperdrive import GridParameterSampling
    from azureml.train.hyperdrive import choice

    param_sampling = GridParameterSampling({
        "num_hidden_layers": choice(1, 2, 3),
        "batch_size": choice(16, 32)
    })
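The count of six follows from multiplying the number of options per hyperparameter (3 x 2). A quick way to check this, using only the standard library rather than the Hyperdrive classes, is to enumerate the grid:

```python
from itertools import product

# The same discrete choices as in the grid sampling example above.
space = {
    "num_hidden_layers": [1, 2, 3],
    "batch_size": [16, 32],
}

# Grid sampling visits every combination of the discrete choices.
grid = [dict(zip(space, values)) for values in product(*space.values())]
```

A continuous range such as the learning_rate in the question has no finite list of values to enumerate, which is why grid sampling is not an option there.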

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-tune-hyperparameters

QUESTION 208

You are training machine learning models in Azure Machine Learning. You use Hyperdrive to tune the hyperparameter.

In previous model training and tuning runs, many models showed similar performance.

You need to select an early termination policy that meets the following requirements:

- accounts for the performance of all previous runs when evaluating the current run

- avoids comparing the current run with only the best performing run to date

Which two early termination policies should you use? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Median stopping

B. Bandit

C. Default

D. Truncation selection

Answer: AC

Explanation:

The Median Stopping policy computes running averages across all runs and cancels runs whose best performance is worse than the median of the running averages.

If no policy is specified, the hyperparameter tuning service will let all training runs execute to completion.
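The median stopping rule described above can be sketched in a few lines. The run histories are made-up numbers, a higher metric is assumed to be better, and the evaluation interval and delay settings of the real policy are left out of this simplification.

```python
from statistics import mean, median


def runs_to_cancel(history):
    """history maps run id -> metric values reported so far (higher is better)."""
    running_averages = {run_id: mean(values) for run_id, values in history.items()}
    threshold = median(running_averages.values())
    # Cancel runs whose best value so far is worse than the median running average.
    return sorted(run_id for run_id, values in history.items() if max(values) < threshold)


# Hypothetical metric histories for three runs.
history = {
    "run_a": [0.70, 0.80, 0.90],
    "run_b": [0.60, 0.65, 0.70],
    "run_c": [0.40, 0.45, 0.50],
}
cancelled = runs_to_cancel(history)
```

The threshold is derived from all runs rather than from the single best run, which is the property the question's requirements single out.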

Incorrect Answers:

B: BanditPolicy defines an early termination policy based on slack criteria, and a frequency and delay interval for evaluation.

The Bandit policy takes the following configuration parameters:

slack_factor: The amount of slack allowed with respect to the best performing training run. This factor specifies the slack as a ratio.

D: The Truncation selection policy periodically cancels the given percentage of runs that rank the lowest for their performance on the primary metric. The policy strives for fairness in ranking the runs by accounting for improving model performance with training time. When ranking a relatively young run, the policy uses the corresponding (and earlier) performance of older runs for comparison. Therefore, runs aren't terminated for having a lower performance because they have run for less time than other runs.

Reference:

https://docs.microsoft.com/en-us/python/api/azureml-train-core/azureml.train.hyperdrive.medianstoppingpolicy

https://docs.microsoft.com/en-us/python/api/azureml-train-core/azureml.train.hyperdrive.truncationselectionpolicy

https://docs.microsoft.com/en-us/python/api/azureml-train-core/azureml.train.hyperdrive.banditpolicy

QUESTION 209

You are a data scientist working for a hotel booking website company. You use the Azure Machine Learning service to train a model that identifies fraudulent transactions.

You must deploy the model as an Azure Machine Learning real-time web service using the Model.deploy method in the Azure Machine Learning SDK. The deployed web service must return real-time predictions of fraud based on transaction data input.

You need to create the script that is specified as the entry_script parameter for the InferenceConfig class used to deploy the model.

What should the entry script do?

A. Register the model with appropriate tags and properties.

B. Create a Conda environment for the web service compute and install the necessary Python packages.

C. Load the model and use it to predict labels from input data.

D. Start a node on the inference cluster where the web service is deployed.

E. Specify the number of cores and the amount of memory required for the inference compute.

Answer: C

Explanation:

The entry script receives data submitted to a deployed web service and passes it to the model. It then takes the response returned by the model and returns that to the client. The script is specific to your model.

It must understand the data that the model expects and returns.
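The request flow described above can be sketched as a minimal entry script built around the `init()` and `run()` functions that Azure ML entry scripts expose. The stub model, the threshold of 100, and the `{"data": ...}` payload shape are hypothetical; a real script would load a registered model file instead.

```python
import json

model = None  # populated by init()


def _stub_model(row):
    """Hypothetical stand-in for a trained model: flags large transactions."""
    return 1 if sum(row) > 100 else 0


def init():
    """Called once when the service starts: load the model into memory."""
    global model
    # A real script would deserialize a registered model file here.
    model = _stub_model


def run(raw_data):
    """Called per request: parse the input, predict, and return the result."""
    rows = json.loads(raw_data)["data"]
    predictions = [model(row) for row in rows]
    return json.dumps({"predictions": predictions})


init()
response = run(json.dumps({"data": [[10, 20], [90, 50]]}))
```

Loading in `init()` keeps the cost of deserializing the model out of every request, while `run()` handles only parsing and prediction.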

The two things you need to accomplish in your entry script are:

Loading your model (using a function called init())

Running your model on input data (using a function called run())

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-deploy-and-where

QUESTION 210

You develop and train a machine learning model to predict fraudulent transactions for a hotel booking website.

Traffic to the site varies considerably. The site experiences heavy traffic on Monday and Friday and much lower traffic on other days. Holidays are also high web traffic days.

You need to deploy the model as an Azure Machine Learning real-time web service endpoint on compute that can dynamically scale up and down to support demand.

Which deployment compute option should you use?

A. attached Azure Databricks cluster

B. Azure Container Instance (ACI)

C. Azure Kubernetes Service (AKS) inference cluster

D. Azure Machine Learning Compute Instance

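E. attached virtual machine in a different region

Answer: C

Explanation:

An Azure Kubernetes Service (AKS) inference cluster supports autoscaling for real-time web service deployments, so it can scale up and down with demand. An Azure Machine Learning compute instance is a single-node development workstation and does not scale in this way.

By contrast, an Azure Machine Learning compute cluster is a managed-compute infrastructure that allows you to easily create a single or multi-node compute. The compute is created within your workspace region as a resource that can be shared with other users in your workspace. It scales up automatically when a job is submitted and can be put in an Azure Virtual Network, but it is intended for training and batch jobs rather than real-time endpoints.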

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-create-attach-compute-sdk

QUESTION 211

You use the Azure Machine Learning SDK in a notebook to run an experiment using a script file in an experiment folder.

The experiment fails.

You need to troubleshoot the failed experiment.

What are two possible ways to achieve this goal? Each correct answer presents a complete solution.

A. Use the get_metrics() method of the run object to retrieve the experiment run logs.

B. Use the get_details_with_logs() method of the run object to display the experiment run logs.

C. View the log files for the experiment run in the experiment folder.

D. View the logs for the experiment run in Azure Machine Learning studio.

E. Use the get_output() method of the run object to retrieve the experiment run logs.

Answer: BD

Explanation:

Use get_details_with_logs() to fetch the run details and logs created by the run.

You can monitor Azure Machine Learning runs and view their logs with the Azure Machine Learning studio.
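A minimal sketch of the SDK route, assuming `run` is an `azureml.core.Run` object returned by submitting the experiment. The helper name `summarize_failed_run` is hypothetical. It relies only on `get_status()` and `get_details_with_logs()`, and it dumps whatever dictionary the latter returns rather than assuming specific keys.

```python
import json


def summarize_failed_run(run):
    """Return a printable report for a run that did not complete.

    `run` is expected to be an azureml.core.Run, or any object exposing the
    same two methods. This helper is hypothetical, not part of the SDK.
    """
    status = run.get_status()
    # Run details together with the contents of the log files.
    details = run.get_details_with_logs()
    # default=str keeps the dump from failing on non-JSON values.
    report = json.dumps(details, indent=2, default=str)
    return f"Run status: {status}\n{report}"
```

In a notebook this might be used as `print(summarize_failed_run(run))` after `run.wait_for_completion()` reports a failure.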

Incorrect Answers:

A: You can view the metrics of a trained model using run.get_metrics().

E: get_output() gets the output of the step as PipelineData.

Reference:

https://docs.microsoft.com/en-us/python/api/azureml-pipeline-core/azureml.pipeline.core.steprun

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-monitor-view-training-logs

QUESTION 212

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

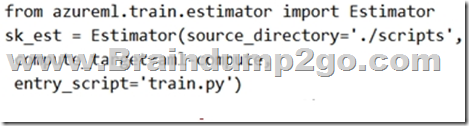

You have a Python script named train.py in a local folder named scripts. The script trains a regression model by using scikit-learn. The script includes code to load a training data file which is also located in the scripts folder.

You must run the script as an Azure ML experiment on a compute cluster named aml-compute.

You need to configure the run to ensure that the environment includes the required packages for model training. You have instantiated a variable named aml-compute that references the target compute cluster.

Solution: Run the following code:

Does the solution meet the goal?

A. Yes

B. No

Answer: B

Explanation:

There is a missing line: conda_packages=['scikit-learn'], which is needed.

Correct example:

    sk_est = Estimator(source_directory='./my-sklearn-proj',
                       script_params=script_params,
                       compute_target=compute_target,
                       entry_script='train.py',
                       conda_packages=['scikit-learn'])

Note:

The Estimator class represents a generic estimator to train data using any supplied framework.

This class is designed for use with machine learning frameworks that do not already have an Azure Machine Learning pre-configured estimator. Pre-configured estimators exist for Chainer, PyTorch, TensorFlow, and SKLearn.
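Applied to the scenario in this question, the configuration might look like the sketch below. It assumes the local `scripts` folder and `train.py` from the question text. The helper names `estimator_settings` and `build_estimator` are hypothetical, and the `Estimator` import is deferred so the settings can be inspected without the Azure ML SDK (v1) installed.

```python
def estimator_settings(compute_target):
    """Keyword arguments for Estimator, based on the question's scenario."""
    return {
        "source_directory": "scripts",       # local folder containing train.py
        "entry_script": "train.py",
        "compute_target": compute_target,    # e.g. the aml-compute cluster
        "conda_packages": ["scikit-learn"],  # package the script depends on
    }


def build_estimator(compute_target):
    """Create the Estimator; requires the azureml-train-core package."""
    from azureml.train.estimator import Estimator  # imported lazily on purpose
    return Estimator(**estimator_settings(compute_target))
```

The resulting estimator would then be passed to `Experiment.submit()`. The key point for this question is that `conda_packages` lists scikit-learn, so the run environment contains the package the training script needs.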

Example:

    from azureml.train.estimator import Estimator

    script_params = {
        # to mount files referenced by mnist dataset
        '--data-folder': ds.as_named_input('mnist').as_mount(),
        '--regularization': 0.8
    }

Reference:

https://docs.microsoft.com/en-us/python/api/azureml-train-core/azureml.train.estimator.estimator

