[July-2023]Free Braindump2go DP-203 PDF Dumps Free DP-203 155Q Download[Q68-Q105]

July/2023 Latest Braindump2go DP-203 Exam Dumps with PDF and VCE Free Updated Today! Following are some new Braindump2go DP-203 Real Exam Questions!

QUESTION 68

You have an Azure Data Lake Storage account that has a virtual network service endpoint configured.

You plan to use Azure Data Factory to extract data from the Data Lake Storage account. The data will then be loaded to a data warehouse in Azure Synapse Analytics by using PolyBase.

Which authentication method should you use to access Data Lake Storage? A. shared access key authentication

B. managed identity authentication

C. account key authentication

D. service principal authentication Answer: B

Explanation:

https://docs.microsoft.com/en-us/azure/data-factory/connector-azure-sql-data-warehouse#use-polybase-to-load-data-into-azure-sql-data-warehouse

QUESTION 69

You plan to create a dimension table in Azure Synapse Analytics that will be less than 1 GB.

You need to create the table to meet the following requirements:

- Provide the fastest query time.

- Minimize data movement during queries.

Which type of table should you use? A. hash distributed

B. heap

C. replicated

D. round-robin Answer: C

Explanation:

A replicated table has a full copy of the table accessible on each Compute node. Replicating a table removes the need to transfer data among Compute nodes before a join or aggregation. Since the table has multiple copies, replicated tables work best when the table size is less than 2 GB compressed. 2 GB is not a hard limit.

Reference:

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/design-guidance-for-replicated-tables

QUESTION 70

Case Study 1 - Contoso, Ltd

Overview

Contoso, Ltd. is a clothing retailer based in Seattle. The company has 2,000 retail stores across the United States and an emerging online presence.

The network contains an Active Directory forest named contoso.com. The forest is integrated with an Azure Active Directory (Azure AD) tenant named contoso.com. Contoso has an Azure subscription associated to the contoso.com Azure AD tenant.

Existing Environment

Transactional Data

Contoso has three years of customer, transactional, operational, sourcing, and supplier data comprised of 10 billion records stored across multiple on-premises Microsoft SQL Server servers. The SQL Server instances contain data from various operational systems. The data is loaded into the instances by using SQL Server Integration Services (SSIS) packages.

You estimate that combining all product sales transactions into a company-wide sales transactions dataset will result in a single table that contains 5 billion rows, with one row per transaction.

Most queries targeting the sales transactions data will be used to identify which products were sold in retail stores and which products were sold online during different time periods. Sales transaction data that is older than three years will be removed monthly.

You plan to create a retail store table that will contain the address of each retail store. The table will be approximately 2 MB. Queries for retail store sales will include the retail store addresses.

You plan to create a promotional table that will contain a promotion ID. The promotion ID will be associated to a specific product. The product will be identified by a product ID. The table will be approximately 5 GB.

Streaming Twitter Data

The ecommerce department at Contoso develops an Azure logic app that captures trending Twitter feeds referencing the company's products and pushes the products to Azure Event Hubs.

Planned Changes and Requirements

Planned Changes

Contoso plans to implement the following changes:

Load the sales transaction dataset to Azure Synapse Analytics.

Integrate on-premises data stores with Azure Synapse Analytics by using SSIS packages.

Use Azure Synapse Analytics to analyze Twitter feeds to assess customer sentiments about products.

Sales Transaction Dataset Requirements

Contoso identifies the following requirements for the sales transaction dataset:

Partition data that contains sales transaction records. Partitions must be designed to provide efficient loads by month. Boundary values must belong to the partition on the right.

Ensure that queries joining and filtering sales transaction records based on product ID complete as quickly as possible.

Implement a surrogate key to account for changes to the retail store addresses.

Ensure that data storage costs and performance are predictable.

Minimize how long it takes to remove old records.

Customer Sentiment Analytics Requirements

Contoso identifies the following requirements for customer sentiment analytics:

Allow Contoso users to use PolyBase in an Azure Synapse Analytics dedicated SQL pool to query the content of the data records that host the Twitter feeds. Data must be protected by using row-level security (RLS). The users must be authenticated by using their own Azure AD credentials.

Maximize the throughput of ingesting Twitter feeds from Event Hubs to Azure Storage without purchasing additional throughput or capacity units.

Store Twitter feeds in Azure Storage by using Event Hubs Capture. The feeds will be converted into Parquet files.

Ensure that the data store supports Azure AD-based access control down to the object level.

Minimize administrative effort to maintain the Twitter feed data records.

Purge Twitter feed data records that are older than two years.

Data Integration Requirements

Contoso identifies the following requirements for data integration:

Use an Azure service that leverages the existing SSIS packages to ingest on-premises data into datasets stored in a dedicated SQL pool of Azure Synapse Analytics and transform the data.

Identify a process to ensure that changes to the ingestion and transformation activities can be version-controlled and developed independently by multiple data engineers.

Hotspot Question

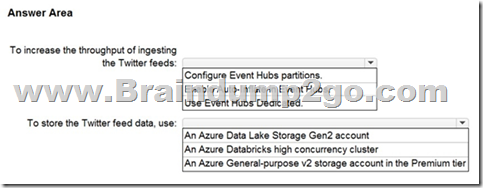

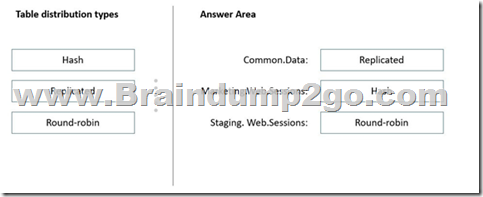

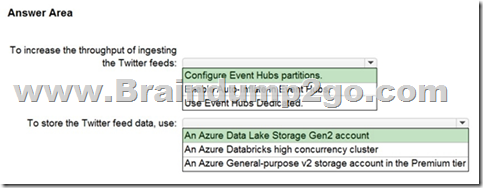

You need to design a data ingestion and storage solution for the Twitter feeds. The solution must meet the customer sentiment analytics requirements.

What should you include in the solution? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Answer:

Explanation:

Box 1: Configure Event Hubs partitions

Scenario: Maximize the throughput of ingesting Twitter feeds from Event Hubs to Azure Storage without purchasing additional throughput or capacity units.

Event Hubs is designed to help with processing of large volumes of events. Event Hubs throughput is scaled by using partitions and throughput-unit allocations.

Box 2: An Azure Data Lake Storage Gen2 account

Scenario: Ensure that the data store supports Azure AD-based access control down to the object level.

Azure Data Lake Storage Gen2 implements an access control model that supports both Azure role-based access control (Azure RBAC) and POSIX-like access control lists (ACLs).

Reference:

https://docs.microsoft.com/en-us/azure/event-hubs/event-hubs-features

https://docs.microsoft.com/en-us/azure/storage/blobs/data-lake-storage-access-control

QUESTION 71

You have an Azure Synapse Analytics dedicated SQL pool that contains a table named Contacts.

Contacts contains a column named Phone.

You need to ensure that users in a specific role only see the last four digits of a phone number when querying the Phone column.

What should you include in the solution? A. a default value

B. dynamic data masking

C. row-level security (RLS)

D. column encryption

E. table partitions Answer: B

Explanation:

Dynamic data masking helps prevent unauthorized access to sensitive data by enabling customers to designate how much of the sensitive data to reveal with minimal impact on the application layer. It's a policy-based security feature that hides the sensitive data in the result set of a query over designated database fields, while the data in the database is not changed.
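
The effect of a partial mask can be sketched in a few lines of Python. This is only an illustration of the behaviour described above, not the database feature itself: the function names, the `admin` role, and the `X` padding character are assumptions made for the example.

```python
def mask_phone(phone: str, visible: int = 4, pad: str = "X") -> str:
    """Replace every digit except the last `visible` digits with `pad`."""
    total_digits = sum(ch.isdigit() for ch in phone)
    digits_to_hide = max(total_digits - visible, 0)
    masked = []
    for ch in phone:
        if ch.isdigit() and digits_to_hide > 0:
            masked.append(pad)          # hide a leading digit
            digits_to_hide -= 1
        else:
            masked.append(ch)           # keep separators and trailing digits
    return "".join(masked)


def read_phone(phone: str, role: str, unmasked_roles=frozenset({"admin"})) -> str:
    # The stored value is never changed; only the query result differs by role.
    return phone if role in unmasked_roles else mask_phone(phone)
```

For example, `read_phone("555-123-4567", "analyst")` returns `XXX-XXX-4567`, while the same call with the hypothetical `admin` role returns the stored value unchanged.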

Reference:

https://docs.microsoft.com/en-us/azure/azure-sql/database/dynamic-data-masking-overview

QUESTION 72

You develop data engineering solutions for a company.

A project requires the deployment of data to Azure Data Lake Storage.

You need to implement role-based access control (RBAC) so that project members can manage the Azure Data Lake Storage resources.

Which three actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point. A. Assign Azure AD security groups to Azure Data Lake Storage.

B. Configure end-user authentication for the Azure Data Lake Storage account.

C. Configure service-to-service authentication for the Azure Data Lake Storage account.

D. Create security groups in Azure Active Directory (Azure AD) and add project members.

E. Configure access control lists (ACL) for the Azure Data Lake Storage account. Answer: ADE

Explanation:

https://docs.microsoft.com/en-us/azure/data-lake-store/data-lake-store-secure-data

QUESTION 73

You are designing an Azure Synapse Analytics dedicated SQL pool.

You need to ensure that you can audit access to personally identifiable information (PII).

What should you include in the solution? A. dynamic data masking

B. row-level security (RLS)

C. sensitivity classifications

D. column-level security Answer: C

Explanation:

Data Discovery & Classification is built into Azure SQL Database, Azure SQL Managed Instance, and Azure Synapse Analytics. It provides basic capabilities for discovering, classifying, labeling, and reporting the sensitive data in your databases.

Your most sensitive data might include business, financial, healthcare, or personal information. Discovering and classifying this data can play a pivotal role in your organization's information-protection approach. It can serve as infrastructure for:

Helping to meet standards for data privacy and requirements for regulatory compliance.

Various security scenarios, such as monitoring (auditing) access to sensitive data.

Controlling access to and hardening the security of databases that contain highly sensitive data.

Reference:

https://docs.microsoft.com/en-us/azure/azure-sql/database/data-discovery-and-classification-overview

QUESTION 74

You are designing a sales transactions table in an Azure Synapse Analytics dedicated SQL pool. The table will contain approximately 60 million rows per month and will be partitioned by month. The table will use a clustered columnstore index and round-robin distribution. Approximately how many rows will there be for each combination of distribution and partition? A. 1 million

B. 5 million

C. 20 million

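D. 60 million Answer: A

Explanation:

A dedicated SQL pool spreads every table across 60 distributions. A monthly partition that holds about 60 million rows therefore contains roughly 60,000,000 / 60 = 1 million rows for each combination of distribution and partition.

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/sql-data-warehouse-tables-partition

QUESTION 75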

You are designing a dimension table for a data warehouse. The table will track the value of the dimension attributes over time and preserve the history of the data by adding new rows as the data changes.

Which type of slowly changing dimension (SCD) should you use? A. Type 0

B. Type 1

C. Type 2

D. Type 3 Answer: C

Explanation:

Type 2 - Creating a new additional record. In this methodology, all history of dimension changes is kept in the database. You capture an attribute change by adding a new row with a new surrogate key to the dimension table. Both the prior and new rows contain the natural key (or other durable identifier) as an attribute. 'Effective date' and 'current indicator' columns are also used in this method. Only one record per natural key can have the current indicator set to 'Y'. For the 'effective date' columns, i.e. start_date and end_date, the end_date of the current record is usually set to 9999-12-31. Introducing changes to the dimensional model in Type 2 can be a very expensive database operation, so it is not recommended for dimensions where a new attribute could be added in the future.
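
The row-versioning step described above can be sketched as follows. This is a minimal in-memory illustration, not a warehouse implementation: the `DimRow` class, the `address` attribute, and the `apply_type2_change` helper are hypothetical names chosen for the example, and the surrogate key is simply the next integer.

```python
from dataclasses import dataclass, replace
from datetime import date

OPEN_END = date(9999, 12, 31)  # conventional end_date for the current row


@dataclass(frozen=True)
class DimRow:
    surrogate_key: int
    natural_key: str
    address: str               # hypothetical tracked attribute
    start_date: date
    end_date: date = OPEN_END
    is_current: bool = True


def apply_type2_change(rows, natural_key, new_address, change_date):
    """Close the current row for natural_key and append a new current row."""
    next_key = max((r.surrogate_key for r in rows), default=0) + 1
    updated = []
    for r in rows:
        if r.natural_key == natural_key and r.is_current:
            # Keep the old version, but mark it as expired.
            r = replace(r, end_date=change_date, is_current=False)
        updated.append(r)
    # The new version gets a new surrogate key and an open-ended end_date.
    updated.append(DimRow(next_key, natural_key, new_address, change_date))
    return updated
```

After one change to a single-row dimension, the list holds two rows for the same natural key: the expired original and one current row whose end_date is 9999-12-31.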

https://www.datawarehouse4u.info/SCD-Slowly-Changing-Dimensions.html

QUESTION 76

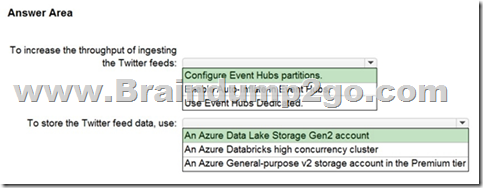

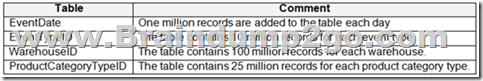

You are designing an inventory updates table in an Azure Synapse Analytics dedicated SQL pool.

The table will have a clustered columnstore index and will include the following columns:

You identify the following usage patterns:

- Analysts will most commonly analyze transactions for a warehouse.

- Queries will summarize by product category type, date, and/or inventory event type.

You need to recommend a partition strategy for the table to minimize query times.

On which column should you recommend partitioning the table? A. ProductCategoryTypeID

B. EventDate

C. WarehouseID

D. EventTypeID Answer: C

Explanation:

The number of records for each warehouse is big enough for a good partitioning.

Note: Table partitions enable you to divide your data into smaller groups of data. In most cases, table partitions are created on a date column.

When creating partitions on clustered columnstore tables, it is important to consider how many rows belong to each partition. For optimal compression and performance of clustered columnstore tables, a minimum of 1 million rows per distribution and partition is needed. Before partitions are created, dedicated SQL pool already divides each table into 60 distributed databases.

QUESTION 77

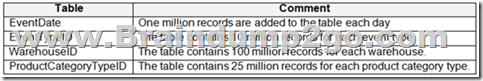

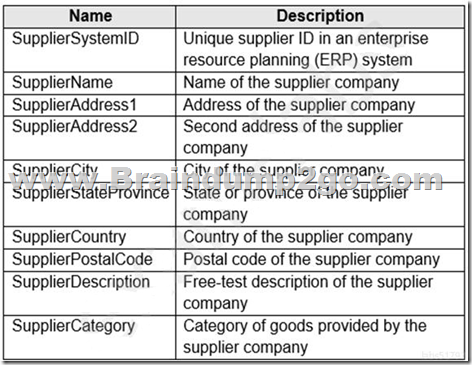

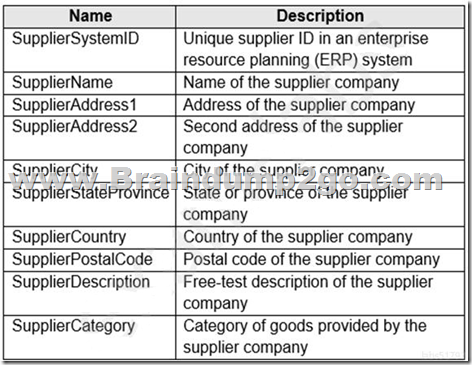

You are designing a slowly changing dimension (SCD) for supplier data in an Azure Synapse Analytics dedicated SQL pool.

You plan to keep a record of changes to the available fields.

The supplier data contains the following columns.

Which three additional columns should you add to the data to create a Type 2 SCD? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point. A. surrogate primary key

B. foreign key

C. effective start date

D. effective end date

E. last modified date

F. business key Answer: ACD

Explanation:

Effective start date: This column should be used to store the date on which a particular record becomes effective. This will allow you to track the history of changes to the data over time.

Effective end date: This column should be used to store the date on which a particular record becomes no longer effective. This will allow you to track the history of changes to the data over time, and to easily identify the current valid record for each supplier.

Surrogate primary key: This column should be used as a unique identifier for each record in the SCD. It can be used to join the SCD to other tables, and to ensure that there are no duplicate records in the SCD.

QUESTION 78

You plan to implement an Azure Data Lake Gen2 storage account.

You need to ensure that the data lake will remain available if a data center fails in the primary Azure region.

The solution must minimize costs.

Which type of replication should you use for the storage account? A. geo-redundant storage (GRS)

B. zone-redundant storage (ZRS)

C. locally-redundant storage (LRS)

D. geo-zone-redundant storage (GZRS) Answer: B

Explanation:

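Zone-redundant storage (ZRS) replicates data across separate data centers (availability zones) in the primary region, so the data lake remains available if one data center fails. It is also less expensive than the geo-redundant options.

QUESTION 79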

You plan to ingest streaming social media data by using Azure Stream Analytics.

The data will be stored in files in Azure Data Lake Storage, and then consumed by using Azure Databricks and PolyBase in Azure Synapse Analytics.

You need to recommend a Stream Analytics data output format to ensure that the queries from Databricks and PolyBase against the files encounter the fewest possible errors.

The solution must ensure that the files can be queried quickly and that the data type information is retained.

What should you recommend? A. Parquet

B. Avro

C. CSV

D. JSON Answer: A

Explanation:

PolyBase does not support JSON, and it does not support Avro either (Avro schema definitions are themselves JSON records). CSV does not carry a schema, so every value is treated as a string. That leaves Parquet, which stores the data types with the data and can be queried quickly.

QUESTION 80

You have an Azure Data Lake Storage Gen2 container that contains 100 TB of data.

You need to ensure that the data in the container is available for read workloads in a secondary region if an outage occurs in the primary region. The solution must minimize costs.

Which type of data redundancy should you use? A. zone-redundant storage (ZRS)

B. read-access geo-redundant storage (RA-GRS)

C. locally-redundant storage (LRS)

D. geo-redundant storage (GRS) Answer: D

Explanation:

The difference between GRS and RA-GRS is simple: with GRS, the data in the secondary region can be read only in the event of a failover from the primary to the secondary, whereas RA-GRS allows reads from the secondary at any time.

QUESTION 81

You have an Azure Synapse Analytics dedicated SQL pool named Pool1. Pool1 contains a partitioned fact table named dbo.Sales and a staging table named stg.Sales that has the matching table and partition definitions.

You need to overwrite the content of the first partition in dbo.Sales with the content of the same partition in stg.Sales. The solution must minimize load times.

What should you do? A. Switch the first partition from dbo.Sales to stg.Sales.

B. Switch the first partition from stg.Sales to dbo.Sales.

C. Update dbo.Sales from stg.Sales.

D. Insert the data from stg.Sales into dbo.Sales. Answer: B

Explanation:

The best option for overwriting the content of the first partition in dbo.Sales with the content of the same partition in stg.Sales and minimizing load times would be to switch the first partition from stg.Sales to dbo.Sales.

Switching partitions is a common approach to efficiently manage large tables in SQL Server. By using the ALTER TABLE SWITCH statement, it is possible to quickly move data between tables with minimal overhead. In this scenario, switching the first partition from stg.Sales to dbo.Sales will replace the data in the first partition of dbo.Sales with the data from the corresponding partition in stg.Sales.

QUESTION 82

You are designing a partition strategy for a fact table in an Azure Synapse Analytics dedicated SQL pool. The table has the following specifications:

- Contain sales data for 20,000 products.

- Use hash distribution on a column named ProductID.

- Contain 2.4 billion records for the years 2019 and 2020.

Which number of partition ranges provides optimal compression and performance of the clustered columnstore index? A. 40

B. 240

C. 400

D. 2,400 Answer: A

Explanation:

Each partition should have around 1 million records per distribution. Dedicated SQL pools already divide each table into 60 distributions.

We have the formula: Records/(Partitions*60)= 1 million

Partitions= Records/(1 million * 60)

Partitions= 2.4 x 1,000,000,000/(1,000,000 * 60) = 40

Note: Having too many partitions can reduce the effectiveness of clustered columnstore indexes if each partition has fewer than 1 million rows. Dedicated SQL pools automatically partition your data into 60 databases. So, if you create a table with 100 partitions, the result will be 6000 partitions.
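
The arithmetic above can be checked with a short Python sketch. It assumes rows are spread evenly across distributions and partitions; the function and constant names are illustrative only.

```python
DISTRIBUTIONS = 60                # a dedicated SQL pool splits each table 60 ways
MIN_ROWS_PER_SEGMENT = 1_000_000  # guideline minimum per distribution and partition


def rows_per_distribution_partition(total_rows: int, partitions: int) -> float:
    """Average rows in each (distribution, partition) combination."""
    return total_rows / (DISTRIBUTIONS * partitions)


def max_partitions(total_rows: int) -> int:
    """Largest partition count that still keeps about 1 million rows per combination."""
    return total_rows // (DISTRIBUTIONS * MIN_ROWS_PER_SEGMENT)
```

With 2.4 billion rows, `max_partitions(2_400_000_000)` gives 40, while 240 partitions would leave well under 1 million rows in each combination.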

Reference:

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql/best-practices-dedicated-sql-pool

QUESTION 83

You have an Azure Synapse Analytics serverless SQL pool named Pool1 and an Azure Data Lake Storage Gen2 account named storage1. The AllowedBlobPublicAccess property is disabled for storage1.

You need to create an external data source that can be used by Azure Active Directory (Azure AD) users to access storage1 from Pool1.

What should you create first? A. an external resource pool

B. a remote service binding

C. database scoped credentials

D. an external library Answer: C

Explanation:

A user must have SELECT permission on an external table to read the data. External tables access the underlying Azure storage by using the database scoped credential defined in the data source.

Note: A database scoped credential is a record that contains the authentication information that is required to connect to a resource outside SQL Server. Most credentials include a Windows user and password.

Reference:

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql/develop-tables-external-tables

https://docs.microsoft.com/en-us/sql/t-sql/statements/create-database-scoped-credential-transact-sql

QUESTION 84

You plan to implement an Azure Data Lake Storage Gen2 container that will contain CSV files.

The size of the files will vary based on the number of events that occur per hour.

File sizes range from 4 KB to 5 GB.

You need to ensure that the files stored in the container are optimized for batch processing.

What should you do? A. Compress the files.

B. Merge the files.

C. Convert the files to JSON.

D. Convert the files to Avro. Answer: D

Explanation:

Avro supports batch and is very relevant for streaming.

Note: Avro is a framework developed within Apache's Hadoop project. It is a row-based storage format that is widely used for serialization. Avro stores its schema in JSON format, making it easy to read and interpret by any program. The data itself is stored in binary format, making it compact and efficient.

Reference:

https://www.adaltas.com/en/2020/07/23/benchmark-study-of-different-file-format/

QUESTION 85

You have an Azure Data Factory instance named DF1 that contains a pipeline named PL1. PL1 includes a tumbling window trigger.

You create five clones of PL1. You configure each cloned pipeline to use a different data source. You need to ensure that the execution schedules of the cloned pipelines match the execution schedule of PL1.

What should you do? A. Add a new trigger to each cloned pipeline.

B. Associate each cloned pipeline to an existing trigger.

C. Create a tumbling window trigger dependency for the trigger of PL1.

D. Modify the Concurrency setting of each pipeline. Answer: B

QUESTION 86

You are planning a streaming data solution that will use Azure Databricks. The solution will stream sales transaction data from an online store. The solution has the following specifications:

- The output data will contain items purchased, quantity, line total sales amount, and line total tax amount.

- Line total sales amount and line total tax amount will be aggregated in Databricks.

- Sales transactions will never be updated. Instead, new rows will be added to adjust a sale.

You need to recommend an output mode for the dataset that will be processed by using Structured Streaming.

The solution must minimize duplicate data.

What should you recommend? A. Append

B. Update

C. Complete Answer: A

Explanation:

The "Append" output mode is appropriate when the output dataset is a set of new records and does not include any updates or deletions. It will only append new rows to the output dataset, which means there will be no duplicate data created as a result of the streaming data solution. Since the solution will never update existing rows, but rather add new rows, the "Append" mode is the best choice to meet the requirements.

QUESTION 87

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this scenario, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure Synapse Analytics dedicated SQL pool that contains a table named Table1.

You have files that are ingested and loaded into an Azure Data Lake Storage Gen2 container named container1.

You plan to insert data from the files into Table1 and transform the data. Each row of data in the files will produce one row in the serving layer of Table1.

You need to ensure that when the source data files are loaded to container1, the DateTime is stored as an additional column in Table1.

Solution: You use a dedicated SQL pool to create an external table that has an additional DateTime column.

Does this meet the goal? A. Yes

B. No Answer: B

Explanation:

Instead use the derived column transformation to generate new columns in your data flow or to modify existing fields.

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/data-flow-derived-column

QUESTION 88

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this scenario, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure Synapse Analytics dedicated SQL pool that contains a table named Table1.

You have files that are ingested and loaded into an Azure Data Lake Storage Gen2 container named container1.

You plan to insert data from the files into Table1 and transform the data. Each row of data in the files will produce one row in the serving layer of Table1.

You need to ensure that when the source data files are loaded to container1, the DateTime is stored as an additional column in Table1.

Solution: In an Azure Synapse Analytics pipeline, you use a data flow that contains a Derived Column transformation.

Does this meet the goal? A. Yes

B. No Answer: A

Explanation:

Use the derived column transformation to generate new columns in your data flow or to modify existing fields.

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/data-flow-derived-column

QUESTION 89

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this scenario, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure Synapse Analytics dedicated SQL pool that contains a table named Table1.

You have files that are ingested and loaded into an Azure Data Lake Storage Gen2 container named container1.

You plan to insert data from the files into Table1 and transform the data.

Each row of data in the files will produce one row in the serving layer of Table1.

You need to ensure that when the source data files are loaded to container1, the DateTime is stored as an additional column in Table1.

Solution: In an Azure Synapse Analytics pipeline, you use a Get Metadata activity that retrieves the DateTime of the files.

Does this meet the goal? A. Yes

B. No Answer: B

QUESTION 90

You have a C# application that processes data from an Azure IoT hub and performs complex transformations.

You need to replace the application with a real-time solution. The solution must reuse as much code as possible from the existing application.

What should you recommend? A. Azure Databricks

B. Azure Event Grid

C. Azure Stream Analytics

D. Azure Data Factory Answer: C

Explanation:

Azure Stream Analytics on IoT Edge empowers developers to deploy near-real-time analytical intelligence closer to IoT devices so that they can unlock the full value of device-generated data. User-defined functions (UDFs) are available in C# for IoT Edge jobs.

Azure Stream Analytics on IoT Edge runs within the Azure IoT Edge framework. Once the job is created in Stream Analytics, you can deploy and manage it using IoT Hub.

References:

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-edge

QUESTION 91

You have several Azure Data Factory pipelines that contain a mix of the following types of activities.

* Wrangling data flow

* Notebook

* Copy

* Jar

Which two Azure services should you use to debug the activities? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point. A. Azure HDInsight

B. Azure Databricks

C. Azure Machine Learning

D. Azure Data Factory

E. Azure Synapse Analytics Answer: DE

Explanation:

Notebook activities run on Azure Databricks; the activities in a pipeline are managed in Azure Data Factory.

QUESTION 92

You use Azure Stream Analytics to receive Twitter data from Azure Event Hubs and to output the data to an Azure Blob storage account.

You need to output the count of tweets during the last five minutes every five minutes. Each tweet must only be counted once.

Which windowing function should you use? A. a five-minute Session window

B. a five-minute Sliding window

C. a five-minute Tumbling window

D. a five-minute Hopping window that has one-minute hop Answer: C

Explanation:

Tumbling window functions segment a data stream into distinct time segments and perform a function against them. The key differentiators of a tumbling window are that the windows repeat, do not overlap, and an event cannot belong to more than one tumbling window.
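
The non-overlapping behaviour can be illustrated with a small Python sketch that counts events per fixed five-minute window. It is not Stream Analytics query syntax; the `tumbling_counts` helper is a hypothetical name, and the boundary handling (window start inclusive, end exclusive) is a simplification that may differ from how the service treats events that fall exactly on a window edge.

```python
from collections import Counter
from datetime import datetime, timedelta


def tumbling_counts(timestamps, window=timedelta(minutes=5)):
    """Count events per fixed, non-overlapping window, keyed by window start."""
    epoch = datetime(1970, 1, 1)
    counts = Counter()
    for ts in timestamps:
        index = (ts - epoch) // window        # which window this event falls in
        counts[epoch + index * window] += 1   # each event lands in exactly one window
    return dict(counts)
```

Because every timestamp maps to exactly one window index, the per-window counts always add up to the total number of events, which is the "counted once" property the question asks for.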

References:

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-window-functions

QUESTION 93

You have an Azure Stream Analytics query.

The query returns a result set that contains 10,000 distinct values for a column named clusterID.

You monitor the Stream Analytics job and discover high latency.

You need to reduce the latency.

Which two actions should you perform? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point. A. Add a pass-through query.

B. Add a temporal analytic function.

C. Scale out the query by using PARTITION BY.

D. Convert the query to a reference query.

E. Increase the number of streaming units. Answer: CE

Explanation:

C: Scaling a Stream Analytics job takes advantage of partitions in the input or output. Partitioning lets you divide data into subsets based on a partition key. A process that consumes the data (such as a Stream Analytics job) can consume and write different partitions in parallel, which increases throughput.

E: Streaming Units (SUs) represent the computing resources that are allocated to execute a Stream Analytics job. The higher the number of SUs, the more CPU and memory resources are allocated for your job.

This capacity lets you focus on the query logic and abstracts the need to manage the hardware to run your Stream Analytics job in a timely manner.

References:

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-parallelization

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-streaming-unit-consumption

QUESTION 94

You are designing a solution that will copy Parquet files stored in an Azure Blob storage account to an Azure Data Lake Storage Gen2 account.

The data will be loaded daily to the data lake and will use a folder structure of {Year}/{Month}/{Day}/.

You need to design a daily Azure Data Factory data load to minimize the data transfer between the two accounts.

Which two configurations should you include in the design? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point. A. Delete the files in the destination before loading new data.

B. Filter by the last modified date of the source files.

C. Delete the source files after they are copied.

D. Specify a file naming pattern for the destination. Answer: BD

Explanation:

Copy only the daily files by using filtering.
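
The selection logic can be sketched in Python as follows. This is not Data Factory's API, only an illustration of filtering on the last modified date and building the {Year}/{Month}/{Day}/ destination path; the `files_for_daily_load` helper and the `(name, last_modified)` tuple layout are assumptions made for the example.

```python
from datetime import date, datetime, timedelta


def files_for_daily_load(files, run_date):
    """Pick files modified on run_date and map each to its dated destination path."""
    start = datetime(run_date.year, run_date.month, run_date.day)
    end = start + timedelta(days=1)
    prefix = f"{start:%Y}/{start:%m}/{start:%d}/"   # {Year}/{Month}/{Day}/
    return [
        (name, prefix + name)
        for name, modified in files
        if start <= modified < end                  # skip files from other days
    ]
```

Only files changed during the run date are selected, so files that were copied on earlier days are not transferred again.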

https://docs.microsoft.com/en-us/azure/data-factory/connector-azure-data-lake-storage

QUESTION 95

A company purchases IoT devices to monitor manufacturing machinery. The company uses an Azure IoT Hub to communicate with the IoT devices.

The company must be able to monitor the devices in real-time.

You need to design the solution.

What should you recommend?

A. Azure Stream Analytics cloud job using Azure PowerShell

B. Azure Analysis Services using Azure Portal

C. Azure Data Factory instance using Azure Portal

D. Azure Analysis Services using Azure PowerShell

Answer: A

Explanation:

Stream Analytics is a cost-effective event processing engine that helps uncover real-time insights from devices, sensors, infrastructure, applications, and data quickly and easily. You can monitor and manage Stream Analytics resources with Azure PowerShell cmdlets and PowerShell scripts that execute basic Stream Analytics tasks.

https://cloudblogs.microsoft.com/sqlserver/2014/10/29/microsoft-adds-iot-streaming-analytics-data-production-and-workflow-services-to-azure/

QUESTION 96

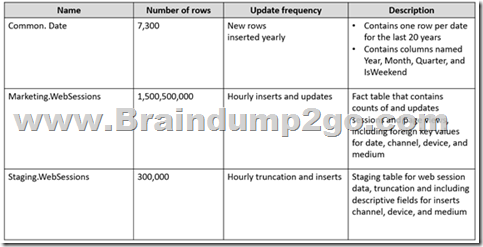

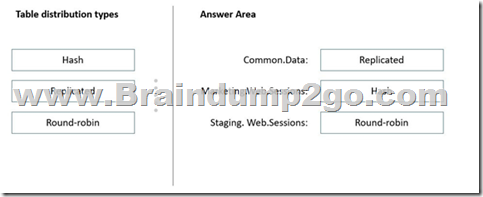

Drag and Drop Question

You have an Azure subscription.

You plan to build a data warehouse in an Azure Synapse Analytics dedicated SQL pool named pool1 that will contain staging tables and a dimensional model.

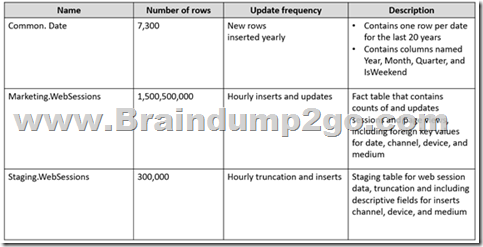

Pool1 will contain the following tables.

You need to design the table storage for pool1. The solution must meet the following requirements:

- Maximize the performance of data loading operations to Staging.WebSessions.

- Minimize query times for reporting queries against the dimensional model.

Which type of table distribution should you use for each table? To answer, drag the appropriate table distribution types to the correct tables. Each table distribution type may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

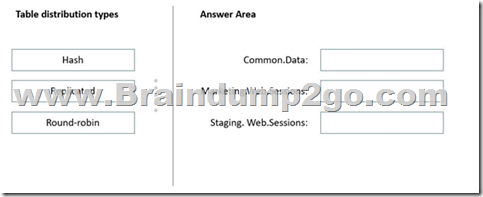

Answer:

Explanation:

Box 1: Replicated

The best table storage option for a small table is to replicate it across all the Compute nodes.

Box 2: Hash

Hash-distribution improves query performance on large fact tables.

Box 3: Round-robin

Round-robin distribution is useful for improving loading speed.
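
The difference between Box 2 and Box 3 can be pictured with a small Python simulation. This is only a simplified model: `crc32` stands in for the pool's internal hash function, the strictly sequential round-robin is an idealization, and the `customer_id` column and row values are invented for the example.

```python
from collections import Counter
from zlib import crc32

DISTRIBUTIONS = 60  # a dedicated SQL pool spreads table data over 60 distributions


def round_robin(rows):
    """Assign rows to distributions in turn, ignoring their content."""
    return [index % DISTRIBUTIONS for index, _ in enumerate(rows)]


def hash_distribute(rows, key):
    """Assign rows by a hash of the distribution column (crc32 as a stand-in)."""
    return [crc32(str(row[key]).encode("utf-8")) % DISTRIBUTIONS for row in rows]


# Hypothetical fact rows with ten distinct customers
rows = [{"customer_id": i % 10, "amount": i} for i in range(600)]

rr_counts = Counter(round_robin(rows))          # rows per distribution
placements = hash_distribute(rows, "customer_id")
```

Round-robin needs no per-row computation and spreads rows evenly, which suits fast staging loads. Hashing places all rows that share a key in one distribution, so joins and aggregations on that key need less data movement.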

Reference:

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/sql-data-warehouse-tables-distribute

QUESTION 97

You are designing an Azure Databricks interactive cluster. The cluster will be used infrequently and will be configured for auto-termination.

You need to ensure that the cluster configuration is retained indefinitely after the cluster is terminated. The solution must minimize costs.

What should you do?

A. Clone the cluster after it is terminated.

B. Terminate the cluster manually when processing completes.

C. Create an Azure runbook that starts the cluster every 90 days.

D. Pin the cluster.

Answer: D

Explanation:

To keep an interactive cluster configuration even after it has been terminated for more than 30 days, an administrator can pin a cluster to the cluster list.

References:

https://docs.azuredatabricks.net/clusters/clusters-manage.html#automatic-termination

QUESTION 98

You have an Azure Synapse Analytics job that uses Scala.

You need to view the status of the job.

What should you do?

A. From Azure Monitor, run a Kusto query against the AzureDiagnostics table.

B. From Azure Monitor, run a Kusto query against the SparkLoggingEvent_CL table.

C. From Synapse Studio, select the workspace. From Monitor, select Apache Spark applications.

D. From Synapse Studio, select the workspace. From Monitor, select SQL requests.

Answer: C

Explanation:

Use Synapse Studio to monitor your Apache Spark applications. To monitor a running Apache Spark application, open Monitor, then select Apache Spark applications. To view the details about the Apache Spark applications that are running, select the submitted Apache Spark application and view the details. If the Apache Spark application is still running, you can monitor the progress.

Reference:

https://docs.microsoft.com/en-us/azure/synapse-analytics/monitoring/apache-spark-applications

QUESTION 99

You configure monitoring for a Microsoft Azure SQL Data Warehouse implementation. The implementation uses PolyBase to load data from comma-separated value (CSV) files stored in Azure Data Lake Gen2 using an external table.

Files with an invalid schema cause errors to occur.

You need to monitor for an invalid schema error.

For which error should you monitor?

A. EXTERNAL TABLE access failed due to internal error: 'Java exception raised on call to HdfsBridge_Connect: Error [com.microsoft.polybase.client.KerberosSecureLogin] occurred while accessing external files.'

B. EXTERNAL TABLE access failed due to internal error: 'Java exception raised on call to HdfsBridge_Connect: Error [No FileSystem for scheme: wasbs] occurred while accessing external file.'

C. Cannot execute the query "Remote Query" against OLE DB provider "SQLNCLI11" for linked server "(null)". Query aborted-- the maximum reject threshold (0 rows) was reached while reading from an external source: 1 rows rejected out of total 1 rows processed.

D. EXTERNAL TABLE access failed due to internal error: 'Java exception raised on call to HdfsBridge_Connect: Error [Unable to instantiate LoginClass] occurred while accessing external files.'

Answer: C

Explanation:

Error message: Cannot execute the query "Remote Query"

Possible Reason:

This error occurs when the files do not all share the same schema. When a PolyBase external table DDL points to a directory, it recursively reads every file in that directory, so a column or data type mismatch in any of those files can raise this error, which is visible in SSMS.
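
The reject-threshold behavior named in the error can be modeled with a short Python sketch. This is a simplified stand-in, not PolyBase's implementation: rows are rejected only on a column-count mismatch, and `load_rows`, `RejectThresholdReached`, and the `reject_value` parameter are hypothetical names chosen for this example.

```python
import csv
import io


class RejectThresholdReached(Exception):
    """Raised when more rows are rejected than the threshold allows."""


def load_rows(csv_text, expected_columns, reject_value=0):
    """Parse rows, rejecting those whose column count differs from the schema."""
    loaded, rejected, processed = [], 0, 0
    for row in csv.reader(io.StringIO(csv_text)):
        processed += 1
        if len(row) == expected_columns:
            loaded.append(row)
            continue
        rejected += 1
        if rejected > reject_value:
            raise RejectThresholdReached(
                f"{rejected} rows rejected out of total {processed} rows processed"
            )
    return loaded


good = load_rows("1,a\n2,b\n", expected_columns=2)

try:
    load_rows("1,a,extra\n", expected_columns=2)  # threshold of 0 rows
    message = None
except RejectThresholdReached as error:
    message = str(error)
```

With a threshold of 0, the first row that does not match the schema aborts the load, which mirrors the "1 rows rejected out of total 1 rows processed" wording in option C.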

Reference:

https://docs.microsoft.com/en-us/sql/relational-databases/polybase/polybase-errors-and-possible-solutions

QUESTION 100

You use Azure Data Lake Storage Gen2.

You need to ensure that workloads can use filter predicates and column projections to filter data at the time the data is read from disk.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Reregister the Microsoft Data Lake Store resource provider.

B. Reregister the Azure Storage resource provider.

C. Create a storage policy that is scoped to a container.

D. Register the query acceleration feature.

E. Create a storage policy that is scoped to a container prefix filter.

Answer: DE

Explanation:

To filter data at the time it is read from disk, you need to use the query acceleration feature of Azure Data Lake Storage Gen2. To enable this feature, you need to register the query acceleration feature in your Azure subscription.

In addition, you can use storage policies scoped to a container prefix filter to specify which files and directories in a container should be eligible for query acceleration. This can be used to optimize the performance of the queries by only considering a subset of the data in the container.

QUESTION 101

You have an enterprise data warehouse in Azure Synapse Analytics named DW1 on a server named Server1.

You need to verify whether the size of the transaction log file for each distribution of DW1 is smaller than 160 GB.

What should you do?

A. On the master database, execute a query against the sys.dm_pdw_nodes_os_performance_counters dynamic management view.

B. From Azure Monitor in the Azure portal, execute a query against the logs of DW1.

C. On DW1, execute a query against the sys.database_files dynamic management view.

D. Execute a query against the logs of DW1 by using the Get-AzOperationalInsightSearchResult PowerShell cmdlet.

Answer: A

Explanation:

The following query returns the transaction log size on each distribution.

If one of the log files is reaching 160 GB, you should consider scaling up your instance or limiting your transaction size.

    -- Transaction log size
    SELECT
        instance_name AS distribution_db,
        cntr_value*1.0/1048576 AS log_file_size_used_GB,
        pdw_node_id
    FROM sys.dm_pdw_nodes_os_performance_counters
    WHERE
        instance_name LIKE 'Distribution_%'
        AND counter_name = 'Log File(s) Used Size (KB)'
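
The arithmetic in the query above (a counter in KB divided by 1,048,576 to get GB) and the 160 GB check can be sketched in Python. This is an illustration only: the sample counter values, the helper names, and the 90% warning ratio are assumptions and are not part of the query or the product.

```python
KB_PER_GB = 1024 * 1024  # 1,048,576, the divisor used in the query
LOG_LIMIT_GB = 160       # the per-distribution limit named in the question


def log_sizes_gb(counter_rows):
    """Convert (distribution, used size in KB) rows into GB per distribution."""
    return {name: used_kb / KB_PER_GB for name, used_kb in counter_rows}


def distributions_near_limit(counter_rows, warn_ratio=0.9):
    """List distributions whose log usage is at or above warn_ratio of the limit."""
    sizes = log_sizes_gb(counter_rows)
    return [name for name, size in sizes.items() if size >= warn_ratio * LOG_LIMIT_GB]


# Hypothetical counter values in KB, as the query would return them
counter_rows = [("Distribution_1", 52_428_800), ("Distribution_2", 157_286_400)]
sizes = log_sizes_gb(counter_rows)
flagged = distributions_near_limit(counter_rows)
```

Here Distribution_1 uses 50 GB and Distribution_2 uses 150 GB, so only the second is flagged as approaching the limit.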

References:

https://docs.microsoft.com/en-us/azure/sql-data-warehouse/sql-data-warehouse-manage-monitor

QUESTION 102

You have a SQL pool in Azure Synapse.

A user reports that queries against the pool take longer than expected to complete.

You need to add monitoring to the underlying storage to help diagnose the issue.

Which two metrics should you monitor? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Cache used percentage

B. DWU Limit

C. Snapshot Storage Size

D. Active queries

E. Cache hit percentage

Answer: AE

Explanation:

A: Cache used is the sum of all bytes in the local SSD cache across all nodes and cache capacity is the sum of the storage capacity of the local SSD cache across all nodes.

E: Cache hits is the sum of all columnstore segment hits in the local SSD cache, and cache miss is the sum of all columnstore segment misses in the local SSD cache across all nodes.
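
The two metrics can be expressed as simple ratios. The Python sketch below computes cache used percentage as cache used divided by cache capacity. For cache hit percentage it assumes the conventional definition, hits divided by hits plus misses, which may differ from the exact formula the Azure metric uses. The function names and sample numbers are invented for this example.

```python
def cache_used_percentage(cache_used_bytes, cache_capacity_bytes):
    """Share of the local SSD cache capacity in use, summed across all nodes."""
    return 100.0 * cache_used_bytes / cache_capacity_bytes


def cache_hit_percentage(hits, misses):
    """Share of columnstore segment reads served from cache (assumed definition)."""
    total = hits + misses
    return 100.0 * hits / total if total else 0.0


used_pct = cache_used_percentage(300, 400)
hit_pct = cache_hit_percentage(90, 10)
```

Monitoring both values together helps separate a cache that is full from a cache that is simply not being hit.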

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/sql-data-warehouse-concept-resource-utilization-query-activity

QUESTION 103

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this scenario, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure Storage account that contains 100 GB of files. The files contain text and numerical values. 75% of the rows contain description data that has an average length of 1.1 MB.

You plan to copy the data from the storage account to an Azure SQL data warehouse.

You need to prepare the files to ensure that the data copies quickly.

Solution: You modify the files to ensure that each row is more than 1 MB.

Does this meet the goal?

A. Yes

B. No

Answer: B

Explanation:

Instead, modify the files to ensure that each row is less than 1 MB.
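
A quick way to check files against this rule is to measure each row's encoded size. The Python sketch below is a hedged example: it treats 1 MB as 1,000,000 bytes (the figure given for PolyBase in the referenced loading guidance), assumes UTF-8 encoding, and uses a hypothetical helper name and sample rows.

```python
MAX_ROW_BYTES = 1_000_000  # assumed row-size ceiling in bytes


def oversized_rows(lines, encoding="utf-8"):
    """Return 1-based numbers of rows whose encoded size reaches the ceiling."""
    return [
        number
        for number, line in enumerate(lines, start=1)
        if len(line.encode(encoding)) >= MAX_ROW_BYTES
    ]


# Hypothetical rows: a short one, a 1.1 MB description, and one just under the ceiling
lines = ["short row", "x" * 1_100_000, "y" * 999_999]
too_large = oversized_rows(lines)
```

Rows reported by the check would need to be shortened or split before the load.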

References:

https://docs.microsoft.com/en-us/azure/sql-data-warehouse/guidance-for-loading-data

QUESTION 104

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this scenario, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure Storage account that contains 100 GB of files. The files contain text and numerical values. 75% of the rows contain description data that has an average length of 1.1 MB.

You plan to copy the data from the storage account to an Azure SQL data warehouse.

You need to prepare the files to ensure that the data copies quickly.

Solution: You modify the files to ensure that each row is less than 1 MB.

Does this meet the goal?

A. Yes

B. No

Answer: A

Explanation:

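PolyBase cannot load rows that contain more than 1,000,000 bytes of data, so each row must be smaller than 1 MB for the load to succeed. In addition, when exporting data into the ORC file format, you might get Java out-of-memory errors when there are large text columns. To work around this limitation, export only a subset of the columns.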

References:

https://docs.microsoft.com/en-us/azure/sql-data-warehouse/guidance-for-loading-data

QUESTION 105

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this scenario, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure Storage account that contains 100 GB of files. The files contain text and numerical values. 75% of the rows contain description data that has an average length of 1.1 MB.

You plan to copy the data from the storage account to an enterprise data warehouse in Azure Synapse Analytics.

You need to prepare the files to ensure that the data copies quickly.

Solution: You convert the files to compressed delimited text files.

Does this meet the goal?

A. Yes

B. No

Answer: A

Explanation:

All file formats have different performance characteristics. For the fastest load, use compressed delimited text files.
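
Producing a compressed delimited text file takes only a few lines with the Python standard library. The sketch below is one possible approach, assuming gzip compression and a pipe delimiter; both choices, the helper name, and the sample rows are assumptions for this example rather than requirements stated in the question.

```python
import csv
import gzip
import io


def write_compressed_delimited(rows, delimiter="|"):
    """Serialize rows as delimited text and return the gzip-compressed bytes."""
    buffer = io.BytesIO()
    with gzip.open(buffer, "wt", encoding="utf-8", newline="") as handle:
        csv.writer(handle, delimiter=delimiter).writerows(rows)
    return buffer.getvalue()


# Hypothetical rows; real data would be streamed from the source files
blob = write_compressed_delimited([[1, "alpha"], [2, "beta"]])
restored = gzip.decompress(blob).decode("utf-8").splitlines()
```

The same function could write to a file on disk by passing a path to `gzip.open` instead of an in-memory buffer.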

https://docs.microsoft.com/en-us/azure/sql-data-warehouse/guidance-for-loading-data
